Cloud Platforms

1 - AWS

Official AMI Images

The official AMI image IDs can be found in the cloud-images.json file attached to the Talos release:

curl -sL https://github.com/siderolabs/talos/releases/download/v0.13.0/cloud-images.json | \

jq -r '.[] | select(.region == "us-east-1") | select (.arch == "amd64") | .id'

Replace us-east-1 and amd64 in the line above with the desired region and architecture.
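To reuse the AMI ID in later commands, the lookup above can be wrapped in a small function. This is a minimal sketch: the function names `cloud_images_url` and `talos_ami_id` are illustrative and not part of Talos, and `curl` and `jq` are assumed to be installed.

```shell
# Build the cloud-images.json URL for a given Talos release tag.
cloud_images_url() {
  printf 'https://github.com/siderolabs/talos/releases/download/%s/cloud-images.json\n' "$1"
}

# Print the AMI ID for a release tag, region, and architecture.
# Hypothetical helper; it applies the same jq selection as the command above.
talos_ami_id() {
  local version="$1" region="$2" arch="$3"
  curl -sL "$(cloud_images_url "$version")" |
    jq -r --arg region "$region" --arg arch "$arch" \
      '.[] | select(.region == $region and .arch == $arch) | .id'
}
```

For example, `AMI=$(talos_ami_id v0.13.0 us-east-1 amd64)` would store the ID in the `$AMI` variable used when creating the EC2 instances.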

Creating a Cluster via the AWS CLI

In this guide we will create an HA Kubernetes cluster with 3 worker nodes. We assume an existing VPC, and some familiarity with AWS. If you need more information on AWS specifics, please see the official AWS documentation.

Create the Subnet

aws ec2 create-subnet \

--region $REGION \

--vpc-id $VPC \

--cidr-block ${CIDR_BLOCK}

Create the AMI

Prepare the Import Prerequisites

Create the S3 Bucket

aws s3api create-bucket \

--bucket $BUCKET \

--create-bucket-configuration LocationConstraint=$REGION \

--acl private

Create the vmimport Role

In order to create an AMI, ensure that the vmimport role exists as described in the official AWS documentation.

Note that the role should be associated with the S3 bucket we created above.

Create the Image Snapshot

First, download the AWS image from a Talos release:

curl -L https://github.com/talos-systems/talos/releases/latest/download/aws-amd64.tar.gz | tar -xzvf -

Copy the RAW disk to S3 and import it as a snapshot:

aws s3 cp disk.raw s3://$BUCKET/talos-aws-tutorial.raw

aws ec2 import-snapshot \

--region $REGION \

--description "Talos kubernetes tutorial" \

--disk-container "Format=raw,UserBucket={S3Bucket=$BUCKET,S3Key=talos-aws-tutorial.raw}"

Save the SnapshotId, as we will need it once the import is done.

To check on the status of the import, run:

aws ec2 describe-import-snapshot-tasks \

--region $REGION \

--import-task-ids <import task ID>

Once the SnapshotTaskDetail.Status indicates completed, we can register the image.
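Rather than re-running the status command by hand, the check can be polled in a loop. The following is a sketch under assumptions: `wait_for_snapshot` is a hypothetical helper, `$REGION` is set as in the earlier commands, the 15-second default interval is arbitrary, and treating `deleting`/`deleted` as failure states is an assumption.

```shell
# Poll an import task until it completes, then print the resulting snapshot ID.
wait_for_snapshot() {
  local task_id="$1" interval="${2:-15}" status
  while :; do
    status=$(aws ec2 describe-import-snapshot-tasks \
      --region "$REGION" \
      --import-task-ids "$task_id" \
      --query 'ImportSnapshotTasks[0].SnapshotTaskDetail.Status' \
      --output text)
    case "$status" in
      completed) break ;;
      # Assumed failure states: stop instead of looping forever.
      deleting|deleted) echo "import task $task_id ended as $status" >&2; return 1 ;;
    esac
    sleep "$interval"
  done
  aws ec2 describe-import-snapshot-tasks \
    --region "$REGION" \
    --import-task-ids "$task_id" \
    --query 'ImportSnapshotTasks[0].SnapshotTaskDetail.SnapshotId' \
    --output text
}
```

Calling `SNAPSHOT=$(wait_for_snapshot <import task ID>)` would then populate the `$SNAPSHOT` variable used when registering the image.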

Register the Image

aws ec2 register-image \

--region $REGION \

--block-device-mappings "DeviceName=/dev/xvda,VirtualName=talos,Ebs={DeleteOnTermination=true,SnapshotId=$SNAPSHOT,VolumeSize=4,VolumeType=gp2}" \

--root-device-name /dev/xvda \

--virtualization-type hvm \

--architecture x86_64 \

--ena-support \

--name talos-aws-tutorial-ami

We now have an AMI we can use to create our cluster. Save the AMI ID, as we will need it when we create EC2 instances.

Create a Security Group

aws ec2 create-security-group \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--description "Security Group for EC2 instances to allow ports required by Talos"

Using the security group ID from above, allow all internal traffic within the same security group:

aws ec2 authorize-security-group-ingress \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--protocol all \

--port 0 \

--source-group $SECURITY_GROUP

and expose the Talos and Kubernetes APIs:

aws ec2 authorize-security-group-ingress \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--protocol tcp \

--port 6443 \

--cidr 0.0.0.0/0

aws ec2 authorize-security-group-ingress \

--region $REGION \

--group-name talos-aws-tutorial-sg \

--protocol tcp \

--port 50000-50001 \

--cidr 0.0.0.0/0

Create a Load Balancer

aws elbv2 create-load-balancer \

--region $REGION \

--name talos-aws-tutorial-lb \

--type network --subnets $SUBNET

Take note of the DNS name and ARN. We will need these soon.
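If the output of the create command is no longer at hand, both values can be looked up by the load balancer's name. A minimal sketch, assuming `$REGION` is set; `lb_field` is a hypothetical helper name.

```shell
# Print one field of the tutorial load balancer, such as DNSName or LoadBalancerArn.
lb_field() {
  aws elbv2 describe-load-balancers \
    --region "$REGION" \
    --names talos-aws-tutorial-lb \
    --query "LoadBalancers[0].$1" \
    --output text
}
```

For example, `LOAD_BALANCER_ARN=$(lb_field LoadBalancerArn)` fills the variable used when creating the listener, and `lb_field DNSName` prints the DNS name needed for the machine configuration.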

Create the Machine Configuration Files

Generating Base Configurations

Using the DNS name of the loadbalancer created earlier, generate the base configuration files for the Talos machines:

$ talosctl gen config talos-k8s-aws-tutorial https://<load balancer IP or DNS>:<port> --with-examples=false --with-docs=false

created controlplane.yaml

created worker.yaml

created talosconfig

Note that the generated configs are too long for the AWS userdata field unless the --with-examples=false and --with-docs=false flags are passed.
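AWS limits user data to 16 KB in raw form, so it can be worth checking the size of each generated file before launching instances. A minimal sketch; `fits_userdata` is a hypothetical helper name.

```shell
# Succeed if the file fits within the 16 KB (16384 byte) EC2 user data limit.
fits_userdata() {
  [ -r "$1" ] || { echo "cannot read $1" >&2; return 2; }
  # Arithmetic expansion strips any padding that wc adds on some platforms.
  local size=$(( $(wc -c < "$1") ))
  [ "$size" -le 16384 ]
}
```

For example, `fits_userdata controlplane.yaml || echo "controlplane.yaml is too large"` reports a config that would be rejected.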

At this point, you can modify the generated configs to your liking.

Optionally, you can specify --config-patch with RFC6902 jsonpatch which will be applied during the config generation.
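As an illustration of the patch format, the sketch below passes a single RFC 6902 `replace` operation to the same command. The patched path and value are examples only and are not something this guide requires; `CONFIG_PATCH` and `gen_patched_config` are hypothetical names.

```shell
# Illustrative RFC 6902 patch: replace the value of one existing field.
# The path and value are examples only; substitute the change you actually need.
CONFIG_PATCH='[{"op": "replace", "path": "/machine/install/disk", "value": "/dev/xvda"}]'

# Generate the configs for the given endpoint with the patch applied.
gen_patched_config() {
  talosctl gen config talos-k8s-aws-tutorial "$1" \
    --with-examples=false --with-docs=false \
    --config-patch "$CONFIG_PATCH"
}
```

It would be invoked as `gen_patched_config "https://<load balancer IP or DNS>:<port>"`. Quoting `$CONFIG_PATCH` keeps the JSON intact as a single argument.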

Validate the Configuration Files

$ talosctl validate --config controlplane.yaml --mode cloud

controlplane.yaml is valid for cloud mode

$ talosctl validate --config worker.yaml --mode cloud

worker.yaml is valid for cloud mode

Create the EC2 Instances

Note: There is a known issue that prevents Talos from running on T2 instance types. Please use T3 if you need burstable instance types.

Create the Control Plane Nodes

CP_COUNT=1

while [[ "$CP_COUNT" -lt 4 ]]; do

aws ec2 run-instances \

--region $REGION \

--image-id $AMI \

--count 1 \

--instance-type t3.small \

--user-data file://controlplane.yaml \

--subnet-id $SUBNET \

--security-group-ids $SECURITY_GROUP \

--associate-public-ip-address \

--tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=talos-aws-tutorial-cp-$CP_COUNT}]"

((CP_COUNT++))

done

Make a note of the resulting PrivateIpAddress of each control plane node for later use.
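The private addresses can also be queried afterwards by the Name tag assigned in the loop above. A sketch, assuming `$REGION` is set; `control_plane_ips` is a hypothetical helper name.

```shell
# List the private IPs of the control plane instances, one per line.
control_plane_ips() {
  aws ec2 describe-instances \
    --region "$REGION" \
    --filters "Name=tag:Name,Values=talos-aws-tutorial-cp-*" \
              "Name=instance-state-name,Values=pending,running" \
    --query 'Reservations[].Instances[].PrivateIpAddress' \
    --output text | tr '\t' '\n'
}
```

The text output is tab-separated, so `tr` splits it into one address per line. These are the values later passed to register-targets.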

Create the Worker Nodes

aws ec2 run-instances \

--region $REGION \

--image-id $AMI \

--count 3 \

--instance-type t3.small \

--user-data file://worker.yaml \

--subnet-id $SUBNET \

--security-group-ids $SECURITY_GROUP \

--tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=talos-aws-tutorial-worker}]"

Configure the Load Balancer

aws elbv2 create-target-group \

--region $REGION \

--name talos-aws-tutorial-tg \

--protocol TCP \

--port 6443 \

--target-type ip \

--vpc-id $VPC

Now, using the target group’s ARN and the PrivateIpAddress of each control plane instance that you created, register the targets:

aws elbv2 register-targets \

--region $REGION \

--target-group-arn $TARGET_GROUP_ARN \

--targets Id=$CP_NODE_1_IP Id=$CP_NODE_2_IP Id=$CP_NODE_3_IP

Using the ARNs of the load balancer and target group from previous steps, create the listener:

aws elbv2 create-listener \

--region $REGION \

--load-balancer-arn $LOAD_BALANCER_ARN \

--protocol TCP \

--port 443 \

--default-actions Type=forward,TargetGroupArn=$TARGET_GROUP_ARN

Bootstrap Etcd

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint <control plane 1 IP>

talosctl --talosconfig talosconfig config node <control plane 1 IP>

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

2 - Azure

Creating a Cluster via the CLI

In this guide we will create an HA Kubernetes cluster with 1 worker node. We assume existing Blob Storage, and some familiarity with Azure. If you need more information on Azure specifics, please see the official Azure documentation.

Environment Setup

We’ll make use of the following environment variables throughout the setup. Edit the variables below with your correct information.

# Storage account to use

export STORAGE_ACCOUNT="StorageAccountName"

# Storage container to upload to

export STORAGE_CONTAINER="StorageContainerName"

# Resource group name

export GROUP="ResourceGroupName"

# Location

export LOCATION="centralus"

# Get storage account connection string based on info above

export CONNECTION=$(az storage account show-connection-string \

-n $STORAGE_ACCOUNT \

-g $GROUP \

-o tsv)
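Since every later command depends on these variables, a quick check that none of them is empty can save a failed run. A minimal sketch; `require_vars` is a hypothetical helper, and it uses `eval` only to stay portable across POSIX shells, so it should be called with literal variable names.

```shell
# Report every named variable that is unset or empty; fail if any is missing.
require_vars() {
  local name value missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For this guide the call would be `require_vars STORAGE_ACCOUNT STORAGE_CONTAINER GROUP LOCATION CONNECTION`.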

Create the Image

First, download the Azure image from a Talos release.

Once downloaded, untar with tar -xvf /path/to/azure-amd64.tar.gz

Upload the VHD

Once you have pulled down the image, you can upload it to blob storage with:

az storage blob upload \

--connection-string $CONNECTION \

--container-name $STORAGE_CONTAINER \

-f /path/to/extracted/talos-azure.vhd \

-n talos-azure.vhd

Register the Image

Now that the image is present in our blob storage, we’ll register it.

az image create \

--name talos \

--source https://$STORAGE_ACCOUNT.blob.core.windows.net/$STORAGE_CONTAINER/talos-azure.vhd \

--os-type linux \

-g $GROUP

Network Infrastructure

Virtual Networks and Security Groups

Once the image is prepared, we’ll want to work through setting up the network. Issue the following to create a network security group and add rules to it.

# Create vnet

az network vnet create \

--resource-group $GROUP \

--location $LOCATION \

--name talos-vnet \

--subnet-name talos-subnet

# Create network security group

az network nsg create -g $GROUP -n talos-sg

# Client -> apid

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n apid \

--priority 1001 \

--destination-port-ranges 50000 \

--direction inbound

# Trustd

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n trustd \

--priority 1002 \

--destination-port-ranges 50001 \

--direction inbound

# etcd

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n etcd \

--priority 1003 \

--destination-port-ranges 2379-2380 \

--direction inbound

# Kubernetes API Server

az network nsg rule create \

-g $GROUP \

--nsg-name talos-sg \

-n kube \

--priority 1004 \

--destination-port-ranges 6443 \

--direction inbound

Load Balancer

We will create a public IP, a load balancer, and a health check for our control plane.

# Create public ip

az network public-ip create \

--resource-group $GROUP \

--name talos-public-ip \

--allocation-method static

# Create lb

az network lb create \

--resource-group $GROUP \

--name talos-lb \

--public-ip-address talos-public-ip \

--frontend-ip-name talos-fe \

--backend-pool-name talos-be-pool

# Create health check

az network lb probe create \

--resource-group $GROUP \

--lb-name talos-lb \

--name talos-lb-health \

--protocol tcp \

--port 6443

# Create lb rule for 6443

az network lb rule create \

--resource-group $GROUP \

--lb-name talos-lb \

--name talos-6443 \

--protocol tcp \

--frontend-ip-name talos-fe \

--frontend-port 6443 \

--backend-pool-name talos-be-pool \

--backend-port 6443 \

--probe-name talos-lb-health

Network Interfaces

In Azure, we have to pre-create the NICs for our control plane so that they can be associated with our load balancer.

for i in $( seq 0 1 2 ); do

# Create public IP for each nic

az network public-ip create \

--resource-group $GROUP \

--name talos-controlplane-public-ip-$i \

--allocation-method static

# Create nic

az network nic create \

--resource-group $GROUP \

--name talos-controlplane-nic-$i \

--vnet-name talos-vnet \

--subnet talos-subnet \

--network-security-group talos-sg \

--public-ip-address talos-controlplane-public-ip-$i \

--lb-name talos-lb \

--lb-address-pools talos-be-pool

done

Cluster Configuration

With our networking bits set up, we’ll fetch the IP for our load balancer and create our configuration files.

LB_PUBLIC_IP=$(az network public-ip show \

--resource-group $GROUP \

--name talos-public-ip \

--query '[ipAddress]' \

--output tsv)

talosctl gen config talos-k8s-azure-tutorial https://${LB_PUBLIC_IP}:6443

Compute Creation

We are now ready to create our Azure nodes.

# Create availability set

az vm availability-set create \

--name talos-controlplane-av-set \

-g $GROUP

# Create the controlplane nodes

for i in $( seq 0 1 2 ); do

az vm create \

--name talos-controlplane-$i \

--image talos \

--custom-data ./controlplane.yaml \

-g $GROUP \

--admin-username talos \

--generate-ssh-keys \

--verbose \

--boot-diagnostics-storage $STORAGE_ACCOUNT \

--os-disk-size-gb 20 \

--nics talos-controlplane-nic-$i \

--availability-set talos-controlplane-av-set \

--no-wait

done

# Create worker node

az vm create \

--name talos-worker-0 \

--image talos \

--vnet-name talos-vnet \

--subnet talos-subnet \

--custom-data ./worker.yaml \

-g $GROUP \

--admin-username talos \

--generate-ssh-keys \

--verbose \

--boot-diagnostics-storage $STORAGE_ACCOUNT \

--nsg talos-sg \

--os-disk-size-gb 20 \

--no-wait

# NOTES:

# `--admin-username` and `--generate-ssh-keys` are required by the az cli,

# but are not actually used by talos

# `--os-disk-size-gb` is the backing disk for Kubernetes and any workload containers

# `--boot-diagnostics-storage` is to enable console output which may be necessary

# for troubleshooting

Bootstrap Etcd

You should now be able to interact with your cluster with talosctl.

First, we need to discover the public IP of our first control plane node.

CONTROL_PLANE_0_IP=$(az network public-ip show \

--resource-group $GROUP \

--name talos-controlplane-public-ip-0 \

--query '[ipAddress]' \

--output tsv)

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint $CONTROL_PLANE_0_IP

talosctl --talosconfig talosconfig config node $CONTROL_PLANE_0_IP

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

3 - DigitalOcean

Creating a Cluster via the CLI

In this guide we will create an HA Kubernetes cluster with 1 worker node. We assume an existing Space, and some familiarity with DigitalOcean. If you need more information on DigitalOcean specifics, please see the official DigitalOcean documentation.

Create the Image

First, download the DigitalOcean image from a Talos release.

Extract the archive to get the disk.raw file, then compress it with gzip to produce disk.raw.gz.

Using an upload method of your choice (doctl does not have Spaces support), upload the image to a space.

Now, create an image using the URL of the uploaded image:

doctl compute image create \

--region $REGION \

--image-description talos-digital-ocean-tutorial \

--image-url https://talos-tutorial.$REGION.digitaloceanspaces.com/disk.raw.gz \

Talos

Save the image ID. We will need it when creating droplets.

Create a Load Balancer

doctl compute load-balancer create \

--region $REGION \

--name talos-digital-ocean-tutorial-lb \

--tag-name talos-digital-ocean-tutorial-control-plane \

--health-check protocol:tcp,port:6443,check_interval_seconds:10,response_timeout_seconds:5,healthy_threshold:5,unhealthy_threshold:3 \

--forwarding-rules entry_protocol:tcp,entry_port:443,target_protocol:tcp,target_port:6443

We will need the IP of the load balancer. Using the ID of the load balancer, run:

doctl compute load-balancer get --format IP <load balancer ID>

Save it, as we will need it in the next step.

Create the Machine Configuration Files

Generating Base Configurations

Using the IP (or DNS name) of the load balancer created earlier, generate the base configuration files for the Talos machines:

$ talosctl gen config talos-k8s-digital-ocean-tutorial https://<load balancer IP or DNS>:<port>

created controlplane.yaml

created worker.yaml

created talosconfig

At this point, you can modify the generated configs to your liking.

Optionally, you can specify --config-patch with RFC6902 jsonpatch which will be applied during the config generation.

Validate the Configuration Files

$ talosctl validate --config controlplane.yaml --mode cloud

controlplane.yaml is valid for cloud mode

$ talosctl validate --config worker.yaml --mode cloud

worker.yaml is valid for cloud mode

Create the Droplets

Create the Control Plane Nodes

Run the following commands to create three control plane nodes:

doctl compute droplet create \

--region $REGION \

--image <image ID> \

--size s-2vcpu-4gb \

--enable-private-networking \

--tag-names talos-digital-ocean-tutorial-control-plane \

--user-data-file controlplane.yaml \

--ssh-keys <ssh key fingerprint> \

talos-control-plane-1

doctl compute droplet create \

--region $REGION \

--image <image ID> \

--size s-2vcpu-4gb \

--enable-private-networking \

--tag-names talos-digital-ocean-tutorial-control-plane \

--user-data-file controlplane.yaml \

--ssh-keys <ssh key fingerprint> \

talos-control-plane-2

doctl compute droplet create \

--region $REGION \

--image <image ID> \

--size s-2vcpu-4gb \

--enable-private-networking \

--tag-names talos-digital-ocean-tutorial-control-plane \

--user-data-file controlplane.yaml \

--ssh-keys <ssh key fingerprint> \

talos-control-plane-3

Note: Although SSH is not used by Talos, DigitalOcean still requires that an SSH key be associated with the droplet. Create a dummy key that can be used to satisfy this requirement.
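The three droplet commands above differ only in the droplet name, so they can also be written as a loop. A sketch under assumptions: `IMAGE_ID` and `SSH_KEY_FINGERPRINT` are hypothetical variables holding the values written as `<image ID>` and `<ssh key fingerprint>` above, and `create_control_plane_droplets` is an illustrative name.

```shell
# Create three control plane droplets that differ only in name.
create_control_plane_droplets() {
  local i
  for i in 1 2 3; do
    doctl compute droplet create \
      --region "$REGION" \
      --image "$IMAGE_ID" \
      --size s-2vcpu-4gb \
      --enable-private-networking \
      --tag-names talos-digital-ocean-tutorial-control-plane \
      --user-data-file controlplane.yaml \
      --ssh-keys "$SSH_KEY_FINGERPRINT" \
      "talos-control-plane-$i" || return 1
  done
}
```

The loop stops at the first failed creation, which avoids ending up with a partially created control plane without noticing.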

Create the Worker Nodes

Run the following to create a worker node:

doctl compute droplet create \

--region $REGION \

--image <image ID> \

--size s-2vcpu-4gb \

--enable-private-networking \

--user-data-file worker.yaml \

--ssh-keys <ssh key fingerprint> \

talos-worker-1

Bootstrap Etcd

To configure talosctl we will need the first control plane node’s IP:

doctl compute droplet get --format PublicIPv4 <droplet ID>

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint <control plane 1 IP>

talosctl --talosconfig talosconfig config node <control plane 1 IP>

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

4 - GCP

Creating a Cluster via the CLI

In this guide, we will create an HA Kubernetes cluster in GCP with 1 worker node. We will assume an existing Cloud Storage bucket, and some familiarity with Google Cloud. If you need more information on Google Cloud specifics, please see the official Google documentation.

Environment Setup

We’ll make use of the following environment variables throughout the setup. Edit the variables below with your correct information.

# Storage bucket to use

export STORAGE_BUCKET="StorageBucketName"

# Region

export REGION="us-central1"

Create the Image

First, download the Google Cloud image from a Talos release.

These images are called gcp-$ARCH.tar.gz.

Upload the Image

Once you have downloaded the image, you can upload it to your storage bucket with:

gsutil cp /path/to/gcp-amd64.tar.gz gs://$STORAGE_BUCKET

Register the Image

Now that the image is present in our bucket, we’ll register it.

gcloud compute images create talos \

--source-uri=gs://$STORAGE_BUCKET/gcp-amd64.tar.gz \

--guest-os-features=VIRTIO_SCSI_MULTIQUEUE

Network Infrastructure

Load Balancers and Firewalls

Once the image is prepared, we’ll want to work through setting up the network. Issue the following to create a firewall, load balancer, and their required components.

# Create Instance Group

gcloud compute instance-groups unmanaged create talos-ig \

--zone $REGION-b

# Create port for IG

gcloud compute instance-groups set-named-ports talos-ig \

--named-ports tcp6443:6443 \

--zone $REGION-b

# Create health check

gcloud compute health-checks create tcp talos-health-check --port 6443

# Create backend

gcloud compute backend-services create talos-be \

--global \

--protocol TCP \

--health-checks talos-health-check \

--timeout 5m \

--port-name tcp6443

# Add instance group to backend

gcloud compute backend-services add-backend talos-be \

--global \

--instance-group talos-ig \

--instance-group-zone $REGION-b

# Create tcp proxy

gcloud compute target-tcp-proxies create talos-tcp-proxy \

--backend-service talos-be \

--proxy-header NONE

# Create LB IP

gcloud compute addresses create talos-lb-ip --global

# Forward 443 from LB IP to tcp proxy

gcloud compute forwarding-rules create talos-fwd-rule \

--global \

--ports 443 \

--address talos-lb-ip \

--target-tcp-proxy talos-tcp-proxy

# Create firewall rule for health checks

gcloud compute firewall-rules create talos-controlplane-firewall \

--source-ranges 130.211.0.0/22,35.191.0.0/16 \

--target-tags talos-controlplane \

--allow tcp:6443

# Create firewall rule to allow talosctl access

gcloud compute firewall-rules create talos-controlplane-talosctl \

--source-ranges 0.0.0.0/0 \

--target-tags talos-controlplane \

--allow tcp:50000

Cluster Configuration

With our networking bits set up, we’ll fetch the IP for our load balancer and create our configuration files.

LB_PUBLIC_IP=$(gcloud compute forwarding-rules describe talos-fwd-rule \

--global \

--format json \

| jq -r .IPAddress)

talosctl gen config talos-k8s-gcp-tutorial https://${LB_PUBLIC_IP}:443

Additionally, you can specify --config-patch with RFC6902 jsonpatch which will be applied during the config generation.

Compute Creation

We are now ready to create our GCP nodes.

# Create the control plane nodes.

for i in $( seq 0 2 ); do

gcloud compute instances create talos-controlplane-$i \

--image talos \

--zone $REGION-b \

--tags talos-controlplane \

--boot-disk-size 20GB \

--metadata-from-file=user-data=./controlplane.yaml

done

# Add control plane nodes to instance group

for i in $( seq 0 2 ); do

gcloud compute instance-groups unmanaged add-instances talos-ig \

--zone $REGION-b \

--instances talos-controlplane-$i

done

# Create worker

gcloud compute instances create talos-worker-0 \

--image talos \

--zone $REGION-b \

--boot-disk-size 20GB \

--metadata-from-file=user-data=./worker.yaml

Bootstrap Etcd

You should now be able to interact with your cluster with talosctl.

First, we need to discover the public IP of our first control plane node.

CONTROL_PLANE_0_IP=$(gcloud compute instances describe talos-controlplane-0 \

--zone $REGION-b \

--format json \

| jq -r '.networkInterfaces[0].accessConfigs[0].natIP')

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint $CONTROL_PLANE_0_IP

talosctl --talosconfig talosconfig config node $CONTROL_PLANE_0_IP

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

5 - Hetzner

Upload image

Hetzner Cloud does not support uploading custom images. You can email their support to get a Talos ISO uploaded by following issues:3599, or you can prepare an image snapshot yourself.

There are two ways to prepare your own image:

- Run an instance in rescue mode and replace the system OS with the Talos image

- Use HashiCorp Packer to prepare an image

Rescue mode

Create a new Server in the Hetzner console.

Enable the Hetzner Rescue System for this server and reboot.

Upon a reboot, the server will boot a special minimal Linux distribution designed for repair and reinstall.

Once it is running, log in to the server using SSH and prepare the system disk as follows:

# Check that you are in Rescue mode

df

### Result is like:

# udev 987432 0 987432 0% /dev

# 213.133.99.101:/nfs 308577696 247015616 45817536 85% /root/.oldroot/nfs

# overlay 995672 8340 987332 1% /

# tmpfs 995672 0 995672 0% /dev/shm

# tmpfs 398272 572 397700 1% /run

# tmpfs 5120 0 5120 0% /run/lock

# tmpfs 199132 0 199132 0% /run/user/0

# Download the Talos image

cd /tmp

wget -O /tmp/talos.raw.xz https://github.com/siderolabs/talos/releases/download/v0.13.0/hcloud-amd64.raw.xz

# Replace system

xz -d -c /tmp/talos.raw.xz | dd of=/dev/sda && sync

# shutdown the instance

shutdown -h now

To make sure disk content is consistent, it is recommended to shut the server down before taking an image (snapshot). Once shutdown, simply create an image (snapshot) from the console. You can now use this snapshot to run Talos on the cloud.

Packer

Install Packer on the local machine.

Create a config file for packer to use:

# hcloud.pkr.hcl

packer {

required_plugins {

hcloud = {

version = ">= 1.0.0"

source = "github.com/hashicorp/hcloud"

}

}

}

variable "talos_version" {

type = string

default = "v0.13.0"

}

locals {

image = "https://github.com/siderolabs/talos/releases/download/${var.talos_version}/hcloud-amd64.raw.xz"

}

source "hcloud" "talos" {

rescue = "linux64"

image = "debian-11"

location = "hel1"

server_type = "cx11"

ssh_username = "root"

snapshot_name = "talos system disk"

snapshot_labels = {

type = "infra",

os = "talos",

version = "${var.talos_version}",

}

}

build {

sources = ["source.hcloud.talos"]

provisioner "shell" {

inline = [

"apt-get install -y wget",

"wget -O /tmp/talos.raw.xz ${local.image}",

"xz -d -c /tmp/talos.raw.xz | dd of=/dev/sda && sync",

]

}

}

Create a new image by issuing the commands shown below. To create a new API token for your project, open the Hetzner Cloud Console, choose a project, go to Access → Security, and create a new token.

# First, set the API token

export HCLOUD_TOKEN=${TOKEN}

# Upload image

packer init .

packer build .

# Save the image ID

export IMAGE_ID=<image-id-in-packer-output>

After doing this, you can find the snapshot in the console interface.

Creating a Cluster via the CLI

This section assumes you have the hcloud console utility on your local machine.

# Set hcloud context and api key

hcloud context create talos-tutorial

Create a Load Balancer

Create a load balancer by issuing the commands shown below. Save the IP/DNS name, as this info will be used in the next step.

hcloud load-balancer create --name controlplane --network-zone eu-central --type lb11 --label 'type=controlplane'

### Result is like:

# LoadBalancer 484487 created

# IPv4: 49.12.X.X

# IPv6: 2a01:4f8:X:X::1

hcloud load-balancer add-service controlplane \

--listen-port 6443 --destination-port 6443 --protocol tcp

hcloud load-balancer add-target controlplane \

--label-selector 'type=controlplane'

Create the Machine Configuration Files

Generating Base Configurations

Using the IP/DNS name of the loadbalancer created earlier, generate the base configuration files for the Talos machines by issuing:

$ talosctl gen config talos-k8s-hcloud-tutorial https://<load balancer IP or DNS>:6443

created controlplane.yaml

created worker.yaml

created talosconfig

At this point, you can modify the generated configs to your liking.

Optionally, you can specify --config-patch with RFC6902 jsonpatches which will be applied during the config generation.

Validate the Configuration Files

Validate any edited machine configs with:

$ talosctl validate --config controlplane.yaml --mode cloud

controlplane.yaml is valid for cloud mode

$ talosctl validate --config worker.yaml --mode cloud

worker.yaml is valid for cloud mode

Create the Servers

We can now create our servers.

Note that you can find IMAGE_ID in the snapshot section of the console: https://console.hetzner.cloud/projects/$PROJECT_ID/servers/snapshots.

Create the Control Plane Nodes

Create the control plane nodes with:

export IMAGE_ID=<your-image-id>

hcloud server create --name talos-control-plane-1 \

--image ${IMAGE_ID} \

--type cx21 --location hel1 \

--label 'type=controlplane' \

--user-data-from-file controlplane.yaml

hcloud server create --name talos-control-plane-2 \

--image ${IMAGE_ID} \

--type cx21 --location fsn1 \

--label 'type=controlplane' \

--user-data-from-file controlplane.yaml

hcloud server create --name talos-control-plane-3 \

--image ${IMAGE_ID} \

--type cx21 --location nbg1 \

--label 'type=controlplane' \

--user-data-from-file controlplane.yaml

Create the Worker Nodes

Create the worker nodes with the following command, repeating (and incrementing the name counter) as many times as desired.

hcloud server create --name talos-worker-1 \

--image ${IMAGE_ID} \

--type cx21 --location hel1 \

--label 'type=worker' \

--user-data-from-file worker.yaml

Bootstrap Etcd

To configure talosctl we will need the first control plane node’s IP.

This can be found by issuing:

hcloud server list | grep talos-control-plane

Set the endpoints and nodes for your talosconfig with:

talosctl --talosconfig talosconfig config endpoint <control-plane-1-IP>

talosctl --talosconfig talosconfig config node <control-plane-1-IP>

Bootstrap etcd on the first control plane node with:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .

6 - Nocloud

Talos supports the nocloud data source implementation.

There are two ways to configure a Talos server with the nocloud platform:

- via SMBIOS “serial number” option

- using CDROM or USB-flash filesystem

SMBIOS Serial Number

This method requires the network connection to be up (e.g. via DHCP). Configuration is delivered from the HTTP server.

ds=nocloud-net;s=http://10.10.0.1/configs/;h=HOSTNAME

After the network initialization is complete, Talos fetches:

- the machine config from http://10.10.0.1/configs/user-data

- the network config (if available) from http://10.10.0.1/configs/network-config
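The relationship between the serial string and the two URLs can be sketched in a few lines of shell. This only illustrates how the URLs follow from the `s=` field; it is not how Talos parses the value internally, and `seed_url` is a hypothetical helper name.

```shell
# Print the seed URL (the s= field) from a nocloud SMBIOS serial string.
seed_url() {
  local field
  # Split the serial on ';' and pick the field that starts with "s=".
  printf '%s\n' "$1" | tr ';' '\n' | while IFS= read -r field; do
    case "$field" in
      s=*) printf '%s\n' "${field#s=}" ;;
    esac
  done
}

serial='ds=nocloud-net;s=http://10.10.0.1/configs/;h=HOSTNAME'
base=$(seed_url "$serial")
echo "${base}user-data"       # machine config
echo "${base}network-config"  # network config, if available
```

Note that the seed URL ends with a slash, so the file names are appended directly.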

SMBIOS: QEMU

Add the following flag to the qemu command line when starting a VM:

qemu-system-x86_64 \

...\

-smbios "type=1,serial=ds=nocloud-net;s=http://10.10.0.1/configs/"

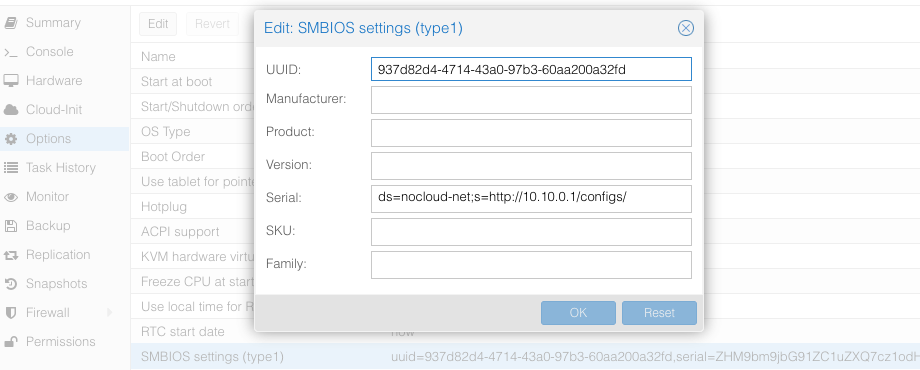

SMBIOS: Proxmox

Set the source machine config through the serial number in the Proxmox GUI.

Proxmox stores the VM config at /etc/pve/qemu-server/$ID.conf ($ID is the VM ID number of the virtual machine), where you will see something like:

...

smbios1: uuid=ceae4d10,serial=ZHM9bm9jbG91ZC1uZXQ7cz1odHRwOi8vMTAuMTAuMC4xL2NvbmZpZ3Mv,base64=1

...

Where serial holds the base64-encoded string version of ds=nocloud-net;s=http://10.10.0.1/configs/.
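The encoded value can be produced or checked with the `base64` utility. A short sketch; the variable names are illustrative, and `printf '%s'` is used so that no trailing newline ends up in the encoded string.

```shell
# Base64-encode the nocloud serial string without a trailing newline.
serial='ds=nocloud-net;s=http://10.10.0.1/configs/'
encoded=$(printf '%s' "$serial" | base64)
echo "$encoded"
# prints ZHM9bm9jbG91ZC1uZXQ7cz1odHRwOi8vMTAuMTAuMC4xL2NvbmZpZ3Mv

# Decode it again to confirm what an existing serial value holds.
printf '%s' "$encoded" | base64 -d
```

Using `echo` instead of `printf '%s'` would append a newline before encoding and produce a different string.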

CDROM/USB

Talos can also get the machine config from locally attached storage without any prior network connection being established.

You can provide configs to the server via files on a VFAT or ISO9660 filesystem.

The filesystem volume label must be cidata or CIDATA.

Example: QEMU

Create and prepare Talos machine config:

export CONTROL_PLANE_IP=192.168.1.10

talosctl gen config talos-nocloud https://$CONTROL_PLANE_IP:6443 --output-dir _out

Prepare cloud-init configs:

mkdir -p iso

mv _out/controlplane.yaml iso/user-data

echo "local-hostname: controlplane-1" > iso/meta-data

cat > iso/network-config << EOF

version: 1

config:

  - type: physical

    name: eth0

    mac_address: "52:54:00:12:34:00"

    subnets:

      - type: static

        address: 192.168.1.10

        netmask: 255.255.255.0

        gateway: 192.168.1.254

EOF

Create the cloud-init ISO image:

cd iso && genisoimage -output cidata.iso -V cidata -r -J user-data meta-data network-config

Start the VM

qemu-system-x86_64 \

...

-cdrom iso/cidata.iso \

...

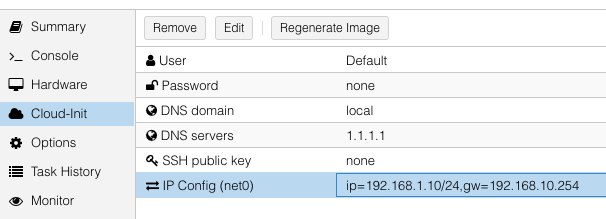

Example: Proxmox

Proxmox can create the cloud-init disk for you. Edit the cloud-init config information in Proxmox, substituting your own information as necessary, and then update the cicustom parameter in /etc/pve/qemu-server/$ID.conf:

cicustom: user=local:snippets/master-1.yml

ipconfig0: ip=192.168.1.10/24,gw=192.168.1.254

nameserver: 1.1.1.1

searchdomain: local

Note: snippets/master-1.yml is the Talos machine config. It is usually located at /var/lib/vz/snippets/master-1.yml. This file must be placed at this path manually, as Proxmox does not support uploading snippets via the API/GUI.

Click the Regenerate Image button after the above changes are made.

7 - OpenStack

Creating a Cluster via the CLI

In this guide, we will create an HA Kubernetes cluster in OpenStack with 1 worker node. We assume some familiarity with OpenStack. If you need more information on OpenStack specifics, please see the official OpenStack documentation.

Environment Setup

You should have an existing openrc file. This file will provide environment variables necessary to talk to your OpenStack cloud. See here for instructions on fetching this file.

Create the Image

First, download the OpenStack image from a Talos release.

These images are called openstack-$ARCH.tar.gz.

Untar this file with tar -xvf openstack-$ARCH.tar.gz.

The resulting file will be called disk.raw.

Upload the Image

Once you have the image, you can upload it to OpenStack with:

openstack image create --public --disk-format raw --file disk.raw talos

Network Infrastructure

Load Balancer and Network Ports

Once the image is prepared, you will need to work through setting up the network. Issue the following to create a load balancer, the necessary network ports for each control plane node, and associations between the two.

Creating loadbalancer:

# Create load balancer, updating vip-subnet-id if necessary

openstack loadbalancer create --name talos-control-plane --vip-subnet-id public

# Create listener

openstack loadbalancer listener create --name talos-control-plane-listener --protocol TCP --protocol-port 6443 talos-control-plane

# Pool and health monitoring

openstack loadbalancer pool create --name talos-control-plane-pool --lb-algorithm ROUND_ROBIN --listener talos-control-plane-listener --protocol TCP

openstack loadbalancer healthmonitor create --delay 5 --max-retries 4 --timeout 10 --type TCP talos-control-plane-pool
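When these commands are scripted, it can help to wait for the load balancer to settle between steps, because a load balancer that is still being provisioned may reject further changes. The following is a minimal sketch that polls the provisioning_status field; the function name wait_for_lb_active and the default timeout values are assumptions chosen for illustration.

```shell
# wait_for_lb_active NAME [TIMEOUT_SECONDS] [POLL_SECONDS]
# Polls the load balancer until its provisioning status is ACTIVE.
# Returns 1 if the status becomes ERROR or the timeout elapses.
wait_for_lb_active() {
  local lb="$1" timeout="${2:-300}" interval="${3:-5}" waited=0 status

  while [ "$waited" -le "$timeout" ]; do
    # Print only the provisioning_status column as a bare value.
    status=$(openstack loadbalancer show "$lb" -f value -c provisioning_status)
    case "$status" in
      ACTIVE) return 0 ;;
      ERROR)
        echo "load balancer $lb is in ERROR state" >&2
        return 1
        ;;
    esac
    sleep "$interval"
    waited=$((waited + interval))
  done

  echo "timed out waiting for load balancer $lb" >&2
  return 1
}
```

A script could call `wait_for_lb_active talos-control-plane` after each of the create commands above before moving on to the next one.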

Creating ports:

# Create ports for control plane nodes, updating network name if necessary

openstack port create --network shared talos-control-plane-1

openstack port create --network shared talos-control-plane-2

openstack port create --network shared talos-control-plane-3

# Create floating IPs for the ports, so that you will have talosctl connectivity to each control plane

openstack floating ip create --port talos-control-plane-1 public

openstack floating ip create --port talos-control-plane-2 public

openstack floating ip create --port talos-control-plane-3 public

Note: Take note of the private and public IPs associated with each of these ports, as they will be used in the next step. Additionally, take note of the port IDs, as they will be used in server creation.

Associate the ports’ private IPs with the load balancer:

# Create members for each port IP, updating subnet-id and address as necessary.

openstack loadbalancer member create --subnet-id shared-subnet --address <PRIVATE IP OF talos-control-plane-1 PORT> --protocol-port 6443 talos-control-plane-pool

openstack loadbalancer member create --subnet-id shared-subnet --address <PRIVATE IP OF talos-control-plane-2 PORT> --protocol-port 6443 talos-control-plane-pool

openstack loadbalancer member create --subnet-id shared-subnet --address <PRIVATE IP OF talos-control-plane-3 PORT> --protocol-port 6443 talos-control-plane-pool
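Instead of copying each private IP by hand, the lookup and the member creation can be combined. This is a sketch under two assumptions: jq is installed, and the installed OpenStack client emits fixed_ips as a JSON list of objects with an ip_address key (older client versions may format this field differently). The function names are hypothetical.

```shell
# port_private_ip PORT
# Prints the first fixed (private) IP address of the given port.
# ASSUMPTION: "port show -f json" returns fixed_ips as a list of objects.
port_private_ip() {
  openstack port show "$1" -f json | jq -r '.fixed_ips[0].ip_address'
}

# add_control_plane_members
# Adds the private IP of each control plane port to the pool,
# using the same names and subnet as the commands above.
add_control_plane_members() {
  local i ip
  for i in 1 2 3; do
    ip=$(port_private_ip "talos-control-plane-$i") || return 1
    # Stop if the lookup produced nothing usable.
    if [ -z "$ip" ] || [ "$ip" = "null" ]; then
      echo "no private IP found for talos-control-plane-$i" >&2
      return 1
    fi
    openstack loadbalancer member create \
      --subnet-id shared-subnet \
      --address "$ip" \
      --protocol-port 6443 \
      talos-control-plane-pool || return 1
  done
}
```

As with the manual commands, update the subnet name if yours is not shared-subnet.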

Security Groups

This example uses the default security group in OpenStack. Ports have been opened to ensure that connectivity from both inside and outside the group is possible. You will want to allow, at a minimum, ports 6443 (Kubernetes API server) and 50000 (Talos API) from external sources. It is also recommended to allow communication over all ports from within the subnet.
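If the two external ports are not yet open, rules along the following lines could be added. This is a hedged sketch: the function name open_talos_ports is hypothetical, and the default source range of 0.0.0.0/0 is an assumption that you will likely want to narrow to the networks you actually connect from.

```shell
# open_talos_ports [GROUP] [SOURCE_CIDR]
# Adds ingress rules for the Kubernetes API server (6443) and the
# Talos API (50000) to the given security group.
# ASSUMPTION: GROUP defaults to "default" and SOURCE_CIDR to 0.0.0.0/0.
open_talos_ports() {
  local group="${1:-default}" cidr="${2:-0.0.0.0/0}" port
  for port in 6443 50000; do
    openstack security group rule create \
      --ingress \
      --protocol tcp \
      --dst-port "$port" \
      --remote-ip "$cidr" \
      "$group" || return 1
  done
}
```

For example, `open_talos_ports default 203.0.113.0/24` would restrict both ports to a single documentation-range network. If several security groups share the name default, pass the group ID instead.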

Cluster Configuration

With our networking set up, we’ll fetch the IP of our load balancer and create our configuration files.

LB_PUBLIC_IP=$(openstack loadbalancer show talos-control-plane -f json | jq -r .vip_address)

talosctl gen config talos-k8s-openstack-tutorial https://${LB_PUBLIC_IP}:6443

Additionally, you can specify --config-patch with an RFC 6902 JSON patch, which will be applied during config generation.
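As an illustration of the patch format, the sketch below replaces the install disk in the generated configuration. The disk path /dev/vda is an assumption about the instance flavor, and the wrapper name gen_talos_config is hypothetical; only the --config-patch flag itself comes from the text above.

```shell
# RFC 6902 patch: a JSON array of operations applied to the machine config.
# ASSUMPTION: the instances expose their disk as /dev/vda.
CONFIG_PATCH='[{"op": "replace", "path": "/machine/install/disk", "value": "/dev/vda"}]'

# gen_talos_config LB_IP
# Generates the cluster configuration with the patch applied,
# using the load balancer address as the Kubernetes endpoint.
gen_talos_config() {
  local lb_ip="$1"
  talosctl gen config talos-k8s-openstack-tutorial "https://${lb_ip}:6443" \
    --config-patch "$CONFIG_PATCH"
}
```

With the variable from the previous step, this would be invoked as `gen_talos_config "$LB_PUBLIC_IP"`.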

Compute Creation

We are now ready to create our OpenStack nodes.

Create the control plane nodes:

# Create control plane nodes 1 through 3, attaching each to the port created earlier.

for i in $( seq 1 3 ); do

openstack server create talos-control-plane-$i --flavor m1.small --nic port-id=talos-control-plane-$i --image talos --user-data /path/to/controlplane.yaml

done

Create worker:

# Update network name as necessary.

openstack server create talos-worker-1 --flavor m1.small --network shared --image talos --user-data /path/to/worker.yaml

Note: This step can be repeated to add more workers.
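Repeating the step for several workers can be wrapped in a loop. This is a minimal sketch that reuses the flavor, network, image, and user-data path from the command above; the function name create_workers is hypothetical.

```shell
# create_workers FIRST LAST
# Creates workers talos-worker-FIRST through talos-worker-LAST.
# Update the network name and the path to worker.yaml as necessary.
create_workers() {
  local i="$1" last="$2"
  while [ "$i" -le "$last" ]; do
    openstack server create "talos-worker-$i" \
      --flavor m1.small \
      --network shared \
      --image talos \
      --user-data /path/to/worker.yaml || return 1
    i=$((i + 1))
  done
}
```

Since talos-worker-1 already exists after the step above, `create_workers 2 3` would add two more workers.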

Bootstrap Etcd

You should now be able to interact with your cluster with talosctl. We will use one of the floating IPs we allocated earlier. It does not matter which one.

Set the endpoints and nodes:

talosctl --talosconfig talosconfig config endpoint <control plane 1 IP>

talosctl --talosconfig talosconfig config node <control plane 1 IP>
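If you did not write down the floating IPs, one can be looked up from its port and passed to talosctl. The following is a sketch with hypothetical function names; it assumes the port has exactly one floating IP and that the client labels the column "Floating IP Address".

```shell
# port_floating_ip PORT
# Prints the floating IP address associated with the given port.
port_floating_ip() {
  openstack floating ip list --port "$1" -f value -c "Floating IP Address"
}

# configure_talosctl [PORT]
# Sets the talosctl endpoint and node to the floating IP of PORT
# (talos-control-plane-1 by default).
configure_talosctl() {
  local ip
  ip=$(port_floating_ip "${1:-talos-control-plane-1}") || return 1
  if [ -z "$ip" ]; then
    echo "no floating IP found" >&2
    return 1
  fi
  talosctl --talosconfig talosconfig config endpoint "$ip" &&
    talosctl --talosconfig talosconfig config node "$ip"
}
```

Running `configure_talosctl` with no arguments performs the same two talosctl calls as above for the first control plane node.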

Bootstrap etcd:

talosctl --talosconfig talosconfig bootstrap

Retrieve the kubeconfig

At this point we can retrieve the admin kubeconfig by running:

talosctl --talosconfig talosconfig kubeconfig .
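Once the kubeconfig has been written to the current directory, a quick check of node status confirms that the cluster is reachable. This is a sketch that assumes kubectl is installed; the helper name count_ready_nodes is hypothetical.

```shell
# count_ready_nodes [KUBECONFIG_PATH]
# Prints how many nodes report a status of exactly "Ready".
# Nodes with a compound status such as "Ready,SchedulingDisabled"
# are not counted.
count_ready_nodes() {
  kubectl --kubeconfig "${1:-./kubeconfig}" get nodes --no-headers |
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

For the cluster in this guide, the count should eventually reach 4 (three control plane nodes and one worker) once all nodes have joined; nodes can take a few minutes to become Ready after bootstrap.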

8 - Scaleway

Talos is known to work on scaleway.com; however, it is currently undocumented.

9 - UpCloud

Talos is known to work on UpCloud.com; however, it is currently undocumented.

10 - Vultr

Talos is known to work on Vultr.com; however, it is currently undocumented.