
Introduction

1 - What is Talos?

Talos is a container-optimized Linux distribution: a reimagining of Linux for distributed systems such as Kubernetes. It is designed to be as minimal as possible while still remaining practical. For these reasons, Talos has a number of features unique to it:

- it is immutable

- it is atomic

- it is ephemeral

- it is minimal

- it is secure by default

- it is managed via a single declarative configuration file and gRPC API

Talos can be deployed on container, cloud, virtualized, and bare metal platforms.

Why Talos

In having less, Talos offers more. Security. Efficiency. Resiliency. Consistency.

All of these areas are improved simply by having less.

2 - Quickstart

Local Docker Cluster

The easiest way to try Talos is to use the CLI (talosctl) to create a cluster on a machine with Docker installed.

Prerequisites

talosctl

Download talosctl (macOS or Linux):

brew install siderolabs/tap/talosctl

kubectl

Download kubectl via one of the methods outlined in the documentation.

Create the Cluster

Now run the following:

talosctl cluster create

Note

If you are using Docker Desktop on a macOS computer, you will need to enable the default Docker socket in your settings.

You can explore the cluster using Talos API commands:

talosctl dashboard --nodes 10.5.0.2

Verify that you can reach Kubernetes:

kubectl get nodes -o wide

NAME                           STATUS   ROLES    AGE    VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE         KERNEL-VERSION   CONTAINER-RUNTIME
talos-default-controlplane-1   Ready    master   115s   v1.27.4   10.5.0.2      <none>        Talos (v1.4.8)   <host kernel>    containerd://1.5.5
talos-default-worker-1         Ready    <none>   115s   v1.27.4   10.5.0.3      <none>        Talos (v1.4.8)   <host kernel>    containerd://1.5.5

Destroy the Cluster

When you are all done, remove the cluster:

talosctl cluster destroy

3 - Getting Started

This document will walk you through installing a full Talos cluster. If this is your first use of Talos Linux, we recommend starting with the Quickstart, which quickly creates a local virtual cluster on your workstation.

Regardless of where you run Talos, in general you need to:

- acquire the installation image

- decide on the endpoint for Kubernetes

- optionally create a load balancer

- configure Talos

- configure talosctl

- bootstrap Kubernetes

Prerequisites

talosctl

talosctl is a CLI tool that provides an easy interface to the Talos API.

Install talosctl before continuing:

curl -sL https://talos.dev/install | sh

Acquire the installation image

The most general way to install Talos is to use the ISO image. (There are easier methods for some platforms, such as pre-built AMIs for AWS; check the specific Installation Guides.)

The latest ISO image can be found on the GitHub Releases page:

- X86: https://github.com/siderolabs/talos/releases/download/v1.4.8/talos-amd64.iso

- ARM64: https://github.com/siderolabs/talos/releases/download/v1.4.8/talos-arm64.iso

When booted from the ISO, Talos will run in RAM, and will not install itself until it is provided a configuration. Thus, it is safe to boot the ISO onto any machine.

Alternative Booting

For network booting and self-built media, you can use the published kernel and initramfs images, which are also available on the GitHub Releases page.

Note that alternative booting requires a number of kernel parameters. Please see the kernel docs for more information.

Decide the Kubernetes Endpoint

In order to configure Kubernetes, Talos needs to know what the endpoint (DNS name or IP address) of the Kubernetes API Server will be.

The endpoint should be the fully-qualified HTTP(S) URL for the Kubernetes API Server, which (by default) runs on port 6443 using HTTPS.

Thus, the format of the endpoint may be something like:

- https://192.168.0.10:6443

- https://kube.mycluster.mydomain.com:6443

- https://[2001:db8:1234::80]:6443
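If you script cluster creation, a small helper can assemble the endpoint in this format. The following is a minimal POSIX shell sketch; the function name k8s_endpoint is hypothetical, and it assumes that any host containing a colon is an IPv6 literal, which must be wrapped in square brackets inside a URL.

```shell
# k8s_endpoint HOST [PORT]
# Hypothetical helper: prints the Kubernetes API endpoint URL for HOST.
# PORT defaults to 6443, the API server's default HTTPS port.
k8s_endpoint() {
    host="$1"
    port="${2:-6443}"
    case "$host" in
        '['*) ;;                # already bracketed IPv6 literal: keep as is
        *:*) host="[$host]" ;;  # assumption: a bare host with ':' is IPv6
    esac
    printf 'https://%s:%s\n' "$host" "$port"
}
```

For example, k8s_endpoint 2001:db8:1234::80 prints https://[2001:db8:1234::80]:6443, matching the third form above.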

To be highly available, the Kubernetes API Server endpoint should be configured so that it is served by all available control plane nodes. There are three common ways to do this:

Dedicated Load-balancer

If you are using a cloud provider or have your own load balancer (such as HAProxy, an nginx reverse proxy, or an F5 load balancer), a dedicated load balancer is a natural choice. Create an appropriate frontend matching the endpoint, and point the backends at the addresses of each of the Talos control plane nodes. (Because the control plane nodes have not been created yet, the IP addresses of the backends may not be known; you can bind the backends to the frontend later.)

Layer 2 Shared IP

Talos has integrated support for serving Kubernetes from a shared/virtual IP address. This method relies on Layer 2 connectivity between control plane Talos nodes.

In this case, we choose an unused IP address on the same subnet as the Talos control plane nodes. For instance, if your control plane node IPs are:

- 192.168.0.10

- 192.168.0.11

- 192.168.0.12

you could choose the IP 192.168.0.15 as your shared IP address.

(Make sure that 192.168.0.15 is not used by any other machine and that your DHCP server will not serve it to any other machine.)

Once chosen, form the full HTTPS URL from this IP:

https://192.168.0.15:6443

If you create a DNS record for this IP, note you will need to use the IP address itself, not the DNS name, to configure the shared IP (machine.network.interfaces[].vip.ip) in the Talos configuration.
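As a sketch, the shared IP can be supplied to the control plane nodes through a machine configuration patch (patches are covered under Customizing Machine Configuration below). The POSIX shell function below writes such a patch. The function name, the interface name you pass (for example eth0), and the use of DHCP for the node's own address are all assumptions to adapt to your hardware.

```shell
# write_vip_patch IFACE VIP FILE
# Hypothetical helper: writes a machine configuration patch that enables the
# Talos shared IP (machine.network.interfaces[].vip.ip) on interface IFACE.
# Assumption: the node obtains its own address via DHCP on the same interface.
write_vip_patch() {
    iface="$1"
    vip="$2"
    file="$3"
    cat > "$file" <<EOF
machine:
  network:
    interfaces:
      - interface: ${iface}
        dhcp: true
        vip:
          ip: ${vip}
EOF
}
```

For example, write_vip_patch eth0 192.168.0.15 vip.patch, followed by talosctl gen config --with-secrets secrets.yaml --config-patch-control-plane @vip.patch my-cluster https://192.168.0.15:6443.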

For more information about using a shared IP, see the related Guide

DNS records

You can use DNS records to provide a measure of redundancy. In this case, you would add multiple A or AAAA records (one for each control plane node) to a DNS name.

For instance, you could add:

kube.cluster1.mydomain.com IN A 192.168.0.10

kube.cluster1.mydomain.com IN A 192.168.0.11

kube.cluster1.mydomain.com IN A 192.168.0.12

Then, your endpoint would be:

https://kube.cluster1.mydomain.com:6443
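With several control plane nodes, the records can be generated rather than typed by hand. A minimal POSIX shell sketch (the function name dns_records is hypothetical) that prints one record per address in the same zone-file form as above, using AAAA for addresses that contain a colon and A otherwise:

```shell
# dns_records NAME IP...
# Hypothetical helper: prints one zone-file record per control plane IP.
# Addresses containing ':' are treated as IPv6 (AAAA), all others as IPv4 (A).
dns_records() {
    name="$1"
    shift
    for ip in "$@"; do
        case "$ip" in
            *:*) rtype=AAAA ;;
            *)   rtype=A ;;
        esac
        printf '%s IN %s %s\n' "$name" "$rtype" "$ip"
    done
}
```

Running dns_records kube.cluster1.mydomain.com 192.168.0.10 192.168.0.11 192.168.0.12 reproduces the three records listed above.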

Decide how to access the Talos API

Many administrative tasks are performed by calling the Talos API on Talos Linux control plane nodes.

We recommend directly accessing the control plane nodes from the talosctl client, if possible (i.e. set your endpoints to the IP addresses of the control plane nodes).

This requires your control plane nodes to be reachable from the client IP.

If the control plane nodes are not directly reachable from the workstation where you run talosctl, then configure a load balancer for TCP port 50000 to be forwarded to the control plane nodes.

Do not use Talos Linux’s built-in VIP support for accessing the Talos API: it will not function in the event of an etcd failure, and you would then be unable to access the Talos API to fix things.

If you create a load balancer to forward the Talos API calls, make a note of its IP address or hostname so that you can configure your talosctl tool’s endpoints below.

Configure Talos

When Talos boots without a configuration, such as when using the Talos ISO, it enters a limited maintenance mode and waits for a configuration to be provided.

In other installation methods, a configuration can be passed in on boot.

For example, Talos can be booted with the talos.config kernel command line argument set to an HTTP(S) URL from which it should receive its configuration. Where a PXE server is available, this is much more efficient than manually configuring each node. If you do use this method, note that Talos requires a number of other kernel command line parameters. See required kernel parameters.

If you are creating Kubernetes clusters on EC2, the configuration file can be passed in as --user-data to the aws ec2 run-instances command.

In any case, we need to generate the configuration to be provided. We start by generating a secrets bundle, which should be saved in a secure location and can be used to generate machine or client configuration at any time:

talosctl gen secrets -o secrets.yaml

Now, we can generate the machine configuration for each node:

talosctl gen config --with-secrets secrets.yaml <cluster-name> <cluster-endpoint>

Here, cluster-name is an arbitrary name for the cluster, used in your local client configuration as a label. It should be unique in the configuration on your local workstation.

The cluster-endpoint is the Kubernetes endpoint you selected above. This is the Kubernetes API URL, and it should be a complete URL, with https:// and port. (The default port is 6443, but you may have configured your load balancer to forward a different port.)

For example:

$ talosctl gen config --with-secrets secrets.yaml my-cluster https://192.168.64.15:6443

generating PKI and tokens

created /Users/taloswork/controlplane.yaml

created /Users/taloswork/worker.yaml

created /Users/taloswork/talosconfig

When you run this command, a number of files are created in your current directory:

- controlplane.yaml

- worker.yaml

- talosconfig

The .yaml files are Machine Configs. They provide Talos Linux servers their complete configuration, describing everything from what disk Talos should be installed on to network settings.

The controlplane.yaml file describes how Talos should form a Kubernetes cluster.

The talosconfig file (which is also YAML) is your local client configuration file.

Controlplane and Worker

The two types of Machine Configs correspond to the two roles of Talos nodes, control plane (which run both the Talos and Kubernetes control planes) and worker nodes (which run the workloads).

The main difference between Controlplane Machine Config files and Worker Machine Config files is that the former contains information about how to form the Kubernetes cluster.

Modifying the Machine configs

The generated Machine Configs have defaults that work for many cases.

They use DHCP for interface configuration, and install to /dev/sda.

If the defaults work for your installation, you may use them as is.

Sometimes, you will need to modify the generated files so they work with your systems. A common example is needing to change the default installation disk. If you try to apply the machine config to a node and get an error like the one below, you need to specify a different installation disk:

$ talosctl apply-config --insecure -n 192.168.64.8 --file controlplane.yaml

error applying new configuration: rpc error: code = InvalidArgument desc = configuration validation failed: 1 error occurred:

* specified install disk does not exist: "/dev/sda"

You can verify which disks your nodes have by using the talosctl disks --insecure command.

Insecure mode is needed at this point as the PKI infrastructure has not yet been set up.

For example:

$ talosctl -n 192.168.64.8 disks --insecure

DEV MODEL SERIAL TYPE UUID WWID MODALIAS NAME SIZE BUS_PATH

/dev/vda - - HDD - - virtio:d00000002v00001AF4 - 69 GB /pci0000:00/0000:00:06.0/virtio2/

In this case, you would modify controlplane.yaml and worker.yaml and edit the lines:

install:
  disk: /dev/sda # The disk used for installations.

to reflect vda instead of sda.
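The edit can also be scripted. The sketch below uses POSIX shell and sed; the function name set_install_disk is hypothetical, and it rewrites the value of every uncommented disk: key, assuming (as in the generated files) that the only such key is the install disk. Review the result before applying it.

```shell
# set_install_disk FILE DISK
# Hypothetical helper: replaces the value of every uncommented "disk:" key in
# FILE with DISK, keeping the indentation and any trailing comment.
# Assumption: the only such key is machine.install.disk.
set_install_disk() {
    file="$1"
    disk="$2"
    # Write to a temporary file and move it into place, because "sed -i"
    # behaves differently on GNU (Linux) and BSD (macOS) sed.
    sed "s|^\([[:space:]]*disk:[[:space:]]*\)[^[:space:]#]*|\1${disk}|" \
        "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

For the example above: set_install_disk controlplane.yaml /dev/vda and set_install_disk worker.yaml /dev/vda.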

Customizing Machine Configuration

The generated machine configuration provides sane defaults for most cases, but machine configuration can be modified to fit specific needs.

Some machine configuration options are available as flags for the talosctl gen config command, for example setting a specific Kubernetes version:

talosctl gen config --with-secrets secrets.yaml --kubernetes-version 1.25.4 my-cluster https://192.168.64.15:6443

Other modifications are done with machine configuration patches.

Machine configuration patches can be applied with the talosctl gen config command:

talosctl gen config --with-secrets secrets.yaml --config-patch-control-plane @cni.patch my-cluster https://192.168.64.15:6443

Note:

@cni.patch means that the patch is read from a file named cni.patch.
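The contents of cni.patch are not shown here. As a hypothetical example, a patch that disables the default CNI, so that you can install a different one yourself, could set cluster.network.cni.name to none. The snippet below writes such a file with a shell here-document; treat the CNI choice as an assumption, not a requirement.

```shell
# Write a hypothetical cni.patch that disables the default CNI, on the
# assumption that you will deploy a different CNI yourself.
cat > cni.patch <<'EOF'
cluster:
  network:
    cni:
      name: none
EOF
```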

Machine Configs as Templates

Individual machines may need different settings: for instance, each may have a different static IP address.

When different files are needed for machines of the same type, there are two supported flows:

- Use the talosctl gen config command to generate a template, and then patch the template for each machine with talosctl machineconfig patch.

- Generate each machine configuration file separately with talosctl gen config while applying patches.

For example, given a machine configuration patch which sets the static machine hostname:

# worker1.patch
machine:
  network:
    hostname: worker1

Either of the following commands will generate a worker machine configuration file with the hostname set to worker1:

$ talosctl gen config --with-secrets secrets.yaml my-cluster https://192.168.64.15:6443

created /Users/taloswork/controlplane.yaml

created /Users/taloswork/worker.yaml

created /Users/taloswork/talosconfig

$ talosctl machineconfig patch worker.yaml --patch @worker1.patch --output worker1.yaml

Alternatively, in a single step:

$ talosctl gen config --with-secrets secrets.yaml --config-patch-worker @worker1.patch --output-types worker -o worker1.yaml my-cluster https://192.168.64.15:6443
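With more than a few machines, the template flow can be run in a loop. This POSIX shell sketch (the function name gen_worker_configs is hypothetical) writes one hostname patch per machine, in the same form as worker1.patch, and then runs talosctl machineconfig patch for each one. It assumes worker.yaml is in the current directory; setting TALOSCTL=echo prints the commands instead of running them.

```shell
# gen_worker_configs HOSTNAME...
# Hypothetical helper: for each HOSTNAME, writes HOSTNAME.patch (setting
# machine.network.hostname) and patches the worker.yaml template into
# HOSTNAME.yaml. Set TALOSCTL=echo for a dry run.
gen_worker_configs() {
    talosctl_cmd="${TALOSCTL:-talosctl}"
    for host in "$@"; do
        cat > "${host}.patch" <<EOF
machine:
  network:
    hostname: ${host}
EOF
        "$talosctl_cmd" machineconfig patch worker.yaml \
            --patch "@${host}.patch" --output "${host}.yaml" || return 1
    done
}
```

For example, gen_worker_configs worker1 worker2 worker3 produces worker1.yaml, worker2.yaml, and worker3.yaml.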

Apply Configuration

To apply the Machine Configs, you need to know the machines’ IP addresses.

Talos will print out the IP addresses of the machines on the console during the boot process:

[4.605369] [talos] task loadConfig (1/1): this machine is reachable at:

[4.607358] [talos] task loadConfig (1/1): 192.168.0.2

[4.608766] [talos] task loadConfig (1/1): server certificate fingerprint:

[4.611106] [talos] task loadConfig (1/1): xA9a1t2dMxB0NJ0qH1pDzilWbA3+DK/DjVbFaJBYheE=

[4.613822] [talos] task loadConfig (1/1):

[4.614985] [talos] task loadConfig (1/1): upload configuration using talosctl:

[4.616978] [talos] task loadConfig (1/1): talosctl apply-config --insecure --nodes 192.168.0.2 --file <config.yaml>

[4.620168] [talos] task loadConfig (1/1): or apply configuration using talosctl interactive installer:

[4.623046] [talos] task loadConfig (1/1): talosctl apply-config --insecure --nodes 192.168.0.2 --mode=interactive

[4.626365] [talos] task loadConfig (1/1): optionally with node fingerprint check:

[4.628692] [talos] task loadConfig (1/1): talosctl apply-config --insecure --nodes 192.168.0.2 --cert-fingerprint 'xA9a1t2dMxB0NJ0qH1pDzilWbA3+DK/DjVbFaJBYheE=' --file <config.yaml>

If you do not have console access, the IP address may also be discoverable from your DHCP server.

Once you have the IP address, you can then apply the correct configuration.

talosctl apply-config --insecure \
    --nodes 192.168.0.2 \
    --file controlplane.yaml

The insecure flag is necessary because the PKI infrastructure has not yet been made available to the node. Note: the connection is still encrypted; it is just unauthenticated. If you have console access, you can extract the server certificate fingerprint and use it for an additional layer of validation:

talosctl apply-config --insecure \
    --nodes 192.168.0.2 \
    --cert-fingerprint xA9a1t2dMxB0NJ0qH1pDzilWbA3+DK/DjVbFaJBYheE= \
    --file controlplane.yaml

Using the fingerprint allows you to be sure you are sending the configuration to the correct machine, but it is completely optional. After the configuration is applied to a node, it will reboot. Repeat this process for each of the nodes in your cluster.
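Repeating the command by hand is error-prone for larger clusters. Below is a minimal POSIX shell sketch, assuming controlplane.yaml and worker.yaml are applied unchanged to every machine of each role (substitute per-machine files if you generated them) and that the IP addresses are passed as space-separated lists. The function name apply_configs is hypothetical; TALOSCTL=echo gives a dry run.

```shell
# apply_configs "CP_IPS" "WORKER_IPS"
# Hypothetical helper: applies controlplane.yaml to each control plane IP and
# worker.yaml to each worker IP in maintenance (--insecure) mode.
# Set TALOSCTL=echo to print the commands instead of running them.
apply_configs() {
    talosctl_cmd="${TALOSCTL:-talosctl}"
    for ip in $1; do  # unquoted on purpose: split the list on whitespace
        "$talosctl_cmd" apply-config --insecure --nodes "$ip" \
            --file controlplane.yaml || return 1
    done
    for ip in $2; do
        "$talosctl_cmd" apply-config --insecure --nodes "$ip" \
            --file worker.yaml || return 1
    done
}
```

For example: apply_configs "192.168.0.2 192.168.0.3 192.168.0.4" "192.168.0.5 192.168.0.6".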

Understand talosctl, endpoints and nodes

It is important to understand the concept of endpoints and nodes.

In short: endpoints are the nodes that talosctl sends commands to, but nodes are the nodes that the command operates on.

The endpoint will forward the command to the nodes, if needed.

Endpoints

Endpoints are the IP addresses to which the talosctl client directly talks.

These should be the set of control plane nodes, either directly or through a load balancer.

Each endpoint will automatically proxy requests destined to another node in the cluster. This means that you only need access to the control plane nodes in order to access the rest of the network.

talosctl will automatically load balance requests and fail over between all of your endpoints.

You can pass in --endpoints <IP Address1>,<IP Address2> as a comma separated list of IP/DNS addresses to the current talosctl command.

You can also set the endpoints in your talosconfig, by calling talosctl config endpoint <IP Address1> <IP Address2>.

Note: these are space separated, not comma separated.

As an example, if the IP addresses of our control plane nodes are:

- 192.168.0.2

- 192.168.0.3

- 192.168.0.4

We would set those in the talosconfig with:

talosctl --talosconfig=./talosconfig \
    config endpoint 192.168.0.2 192.168.0.3 192.168.0.4

Nodes

The node is the target you wish to perform the API call on.

When specifying nodes, their IPs and/or hostnames are as seen by the endpoint servers, not as from the client. This is because all connections are proxied through the endpoints.

You may provide -n or --nodes to any talosctl command to supply the node or (comma-separated) nodes on which you wish to perform the operation.

For example, to see the containers running on node 192.168.0.200:

talosctl -n 192.168.0.200 containers

To see the etcd logs on both nodes 192.168.0.10 and 192.168.0.11:

talosctl -n 192.168.0.10,192.168.0.11 logs etcd

It is possible to set a default set of nodes in the talosconfig file, but our recommendation is to explicitly pass in the node or nodes to be operated on with each talosctl command.

For a more in-depth discussion of Endpoints and Nodes, please see talosctl.

Default configuration file

You can reference which configuration file to use directly with the --talosconfig parameter:

talosctl --talosconfig=./talosconfig \
    --nodes 192.168.0.2 version

However, talosctl comes with tooling to help you integrate and merge this configuration into the default talosctl configuration file.

This is done with the merge option.

talosctl config merge ./talosconfig

This will merge your new talosconfig into the default configuration file ($XDG_CONFIG_HOME/talos/config.yaml), creating it if necessary.

Like Kubernetes configuration, a talosconfig file can hold multiple “contexts”, which correspond to multiple clusters.

The <cluster-name> you chose above will be used as the context name.

Kubernetes Bootstrap

Bootstrapping your Kubernetes cluster with Talos is as simple as:

talosctl bootstrap --nodes 192.168.0.2

The bootstrap operation should only be called ONCE and only on a SINGLE control plane node!

The IP can be that of any of your control plane nodes (or of the load balancer, if one is used for the Talos API endpoint).

At this point, Talos will form an etcd cluster, generate all of the core Kubernetes assets, and start the Kubernetes control plane components.

After a few moments, you will be able to download your Kubernetes client configuration and get started:

talosctl kubeconfig

Running this command will add (merge) your new cluster into your local Kubernetes configuration.

If you would prefer the configuration to not be merged into your default Kubernetes configuration file, pass in a filename:

talosctl kubeconfig alternative-kubeconfig

You should now be able to connect to Kubernetes and see your nodes:

kubectl get nodes

And use talosctl to explore your cluster:

talosctl -n <NODEIP> dashboard

For a list of all the commands and operations that talosctl provides, see the CLI reference.

4 - System Requirements

Minimum Requirements

| Role | Memory | Cores | System Disk |
|---|---|---|---|
| Control Plane | 2 GiB | 2 | 10 GiB |
| Worker | 1 GiB | 1 | 10 GiB |

Recommended

| Role | Memory | Cores | System Disk |
|---|---|---|---|
| Control Plane | 4 GiB | 4 | 100 GiB |
| Worker | 2 GiB | 2 | 100 GiB |

These requirements are similar to those of Kubernetes.
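To check a planned machine against the Minimum Requirements table, a small shell sketch can compare its resources to the thresholds copied from that table. The function name and argument order are hypothetical, and all sizes are whole GiB.

```shell
# meets_minimum ROLE MEMORY_GIB CORES DISK_GIB
# Hypothetical helper: returns 0 if the machine meets the minimum requirements
# for ROLE ("controlplane" or "worker"), 1 if it does not, and 2 for an
# unknown role. Thresholds come from the Minimum Requirements table.
meets_minimum() {
    case "$1" in
        controlplane) min_mem=2; min_cores=2; min_disk=10 ;;
        worker)       min_mem=1; min_cores=1; min_disk=10 ;;
        *) echo "unknown role: $1" >&2; return 2 ;;
    esac
    [ "$2" -ge "$min_mem" ] && [ "$3" -ge "$min_cores" ] && [ "$4" -ge "$min_disk" ]
}
```

For example, meets_minimum controlplane 4 4 100 succeeds, while meets_minimum worker 2 2 8 fails because the disk is below 10 GiB.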

Storage

Talos Linux itself requires less than 100 MB of disk space, but the EPHEMERAL partition is used to store pulled images, container work directories, and so on. Thus a minimum of 10 GiB of disk space is required, and 100 GiB is recommended. Note, however, that because Talos Linux assumes complete control of the disk it is installed on (so that it can control the partition table for image-based upgrades), you cannot partition the rest of the disk for use by workloads.

Thus it is recommended to install Talos Linux on a small, dedicated disk; using a terabyte-sized SSD for the Talos install disk would be wasteful. Sidero Labs recommends using separate disks (apart from the Talos install disk) for storage.

5 - What's New in Talos 1.4

See also upgrade notes for important changes.

Interactive Dashboard

Talos now starts a text-based UI dashboard on virtual console /dev/tty2 and switches to it by default upon boot.

Kernel logs remain available on /dev/tty1.

To switch between virtual TTYs, use the Alt+F1 and Alt+F2 keys.

You can disable this new feature by setting the kernel parameter talos.dashboard.disabled=1.

The dashboard is disabled by default on SBCs to limit resource usage.

The output to the serial console is not affected by this change.

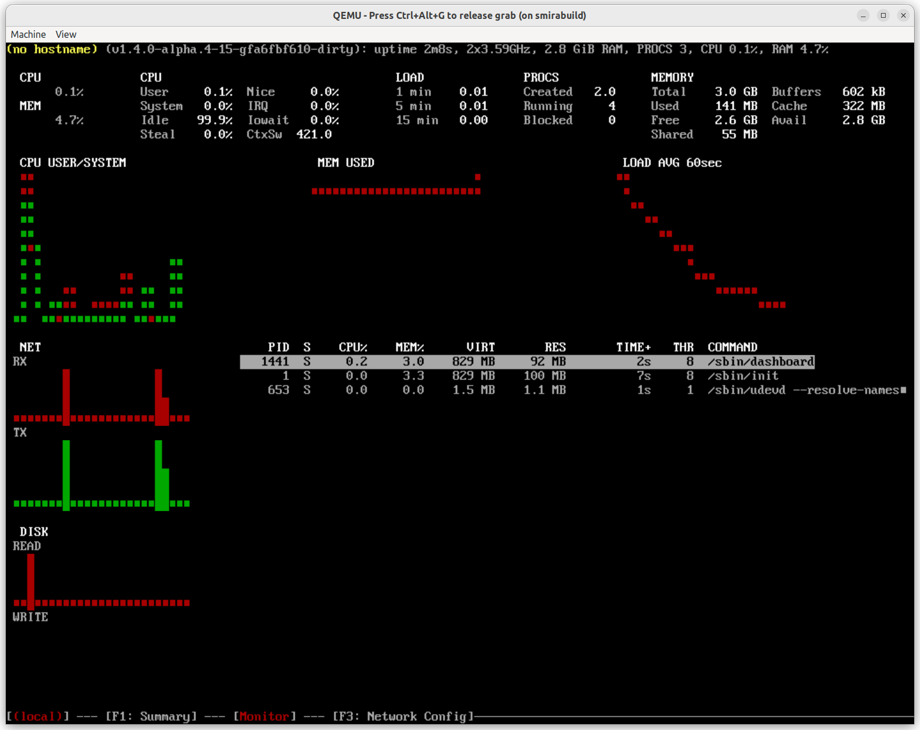

Interactive Dashboard on QEMU VM

Boot Process

Talos now ships with the latest Linux LTS kernel 6.1.x.

GRUB Menu Wipe Options

The Talos ISO GRUB menu now includes an option to completely wipe a Talos installation on a system disk.

The Talos GRUB menu for a system disk boot now includes an option to wipe the STATE and EPHEMERAL partitions, returning the machine to maintenance mode.

Kernel Modules

Talos now automatically loads kernel drivers built as modules. If any system extensions or the Talos base kernel build provide kernel modules and they match the system hardware (via PCI IDs), they will be loaded automatically. Modules can still be loaded explicitly by defining them in the machine configuration.

At the moment only a small subset of device drivers is built as modules, but we plan to expand this list in the future.

Kernel Modules Tree

Talos now supports re-building the kernel modules dependency tree information on upgrades.

This allows modules of the same name to coexist as in-tree and external modules.

System extensions can provide modules installed into the extras directory; when loaded, these take precedence over the in-tree module.

Kernel Argument talos.environment

Talos now supports passing environment variables via talos.environment kernel argument.

Example:

talos.environment=http_proxy=http://proxy.example.com:8080 talos.environment=https_proxy=http://proxy.example.com:8080
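Each variable becomes a separate talos.environment argument. A minimal POSIX shell sketch (the function name talos_env_args is hypothetical) that builds the argument string from KEY=VALUE pairs; it assumes values contain no spaces, since kernel arguments are space-separated.

```shell
# talos_env_args KEY=VALUE...
# Hypothetical helper: prints one talos.environment=KEY=VALUE kernel argument
# per input pair, separated by single spaces.
talos_env_args() {
    sep=''
    for kv in "$@"; do
        printf '%stalos.environment=%s' "$sep" "$kv"
        sep=' '
    done
    printf '\n'
}
```

Calling talos_env_args http_proxy=http://proxy.example.com:8080 https_proxy=http://proxy.example.com:8080 produces the example line above.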

Kernel Argument talos.experimental.wipe

Talos now supports specifying a list of system partitions to be wiped in the talos.experimental.wipe kernel argument.

`talos.experimental.wipe=system:EPHEMERAL,STATE`

Networking

Bond Device Selectors

Bond links can now be described using device selectors instead of explicit device names:

machine:
  network:
    interfaces:
      - interface: bond0
        bond:
          deviceSelectors:
            - hardwareAddr: '00:50:56:*'
            - hardwareAddr: '00:50:57:9c:2c:2d'

VLAN Machine Configuration

Strategic merge config patches now correctly support merging .vlans sections of the network interface.

talosctl CLI

talosctl etcd

Talos adds new APIs to make it easier to perform etcd maintenance operations.

These APIs are available via new talosctl etcd sub-commands:

- talosctl etcd alarm list|disarm

- talosctl etcd defrag

- talosctl etcd status

See also etcd maintenance guide.

talosctl containers

talosctl logs -k and talosctl containers -k now accept and output container display names along with their IDs.

This makes it possible to distinguish between containers with the same name.

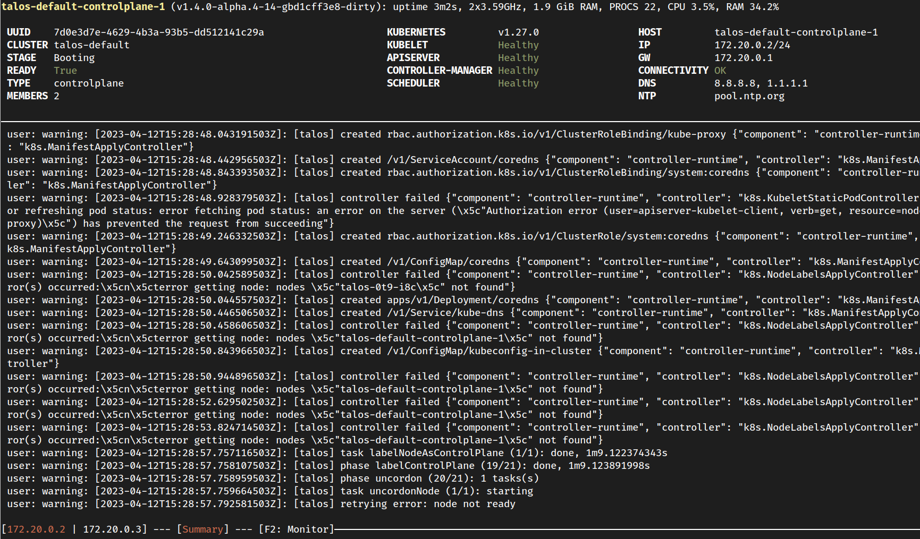

talosctl dashboard

The dashboard now shows the same information as the interactive console (see above), but remotely, over the Talos API:

talosctl dashboard CLI

The previous monitoring screen can be accessed using the <F2> key.

talosctl logs

An issue that could lead to log output corruption in the CLI under certain conditions was fixed.

talosctl netstat

Talos API was extended to support retrieving a list of network connections (sockets) from the node and pods.

The talosctl netstat command was added to retrieve the list of network connections.

talosctl reset

Talos now supports resetting user disks through the Reset API; the list of disks to wipe can be passed using the --user-disks-to-wipe flag to the talosctl reset command.

Miscellaneous

Registry Mirror Catch-All Option

Talos now supports a catch-all option for registry mirrors:

machine:
  registries:
    mirrors:
      docker.io:
        endpoints:
          - https://registry-1.docker.io/
      "*":
        endpoints:
          - https://my-registry.example.com/

Talos API os:operator role

Talos now supports a new os:operator role for the Talos API.

This role allows everything the os:reader role allows, plus access to maintenance APIs: rebooting, shutting down a node, accessing packet capture, etcd alarm APIs, etcd backup, etc.

VMware Platform

Talos now supports loading network configuration on the VMware platform from the metadata key.

See CAPV IPAM Support and Talos issue 6708 for details.

Component Updates

- Linux: 6.1.24

- containerd: v1.6.20

- runc: v1.1.5

- Kubernetes: v1.27.1

- etcd: v3.5.8

- CoreDNS: v1.10.1

- Flannel: v0.21.4

Talos is built with Go 1.20.3.

6 - Support Matrix

| Talos Version | 1.4 | 1.3 |
|---|---|---|
| Release Date | 2023-04-18 | 2022-12-15 (1.3.0) |
| End of Community Support | 1.5.0 release (2023-08-15) | 1.4.0 release (2023-04-18) |
| Enterprise Support | offered by Sidero Labs Inc. | offered by Sidero Labs Inc. |
| Kubernetes | 1.27, 1.26, 1.25 | 1.26, 1.25, 1.24 |
| Architecture | amd64, arm64 | amd64, arm64 |
| Platforms | | |
| - cloud | AWS, GCP, Azure, Digital Ocean, Exoscale, Hetzner, OpenStack, Oracle Cloud, Scaleway, Vultr, Upcloud | AWS, GCP, Azure, Digital Ocean, Exoscale, Hetzner, OpenStack, Oracle Cloud, Scaleway, Vultr, Upcloud |
| - bare metal | x86: BIOS, UEFI; arm64: UEFI; boot: ISO, PXE, disk image | x86: BIOS, UEFI; arm64: UEFI; boot: ISO, PXE, disk image |
| - virtualized | VMware, Hyper-V, KVM, Proxmox, Xen | VMware, Hyper-V, KVM, Proxmox, Xen |
| - SBCs | Banana Pi M64, Jetson Nano, Libre Computer Board ALL-H3-CC, Nano Pi R4S, Pine64, Pine64 Rock64, Radxa ROCK Pi 4c, Raspberry Pi 4B, Raspberry Pi Compute Module 4 | Banana Pi M64, Jetson Nano, Libre Computer Board ALL-H3-CC, Nano Pi R4S, Pine64, Pine64 Rock64, Radxa ROCK Pi 4c, Raspberry Pi 4B, Raspberry Pi Compute Module 4 |
| - local | Docker, QEMU | Docker, QEMU |
| Cluster API | | |
| CAPI Bootstrap Provider Talos | >= 0.6.0 | >= 0.5.6 |
| CAPI Control Plane Provider Talos | >= 0.4.10 | >= 0.4.10 |
| Sidero | >= 0.6.0 | >= 0.5.7 |

Platform Tiers

- Tier 1: Automated tests, high-priority fixes.

- Tier 2: Tested from time to time, medium-priority bugfixes.

- Tier 3: Not tested by core Talos team, community tested.

Tier 1

- Metal

- AWS

- GCP

Tier 2

- Azure

- Digital Ocean

- OpenStack

- VMware

Tier 3

- Exoscale

- Hetzner

- nocloud

- Oracle Cloud

- Scaleway

- Vultr

- Upcloud